
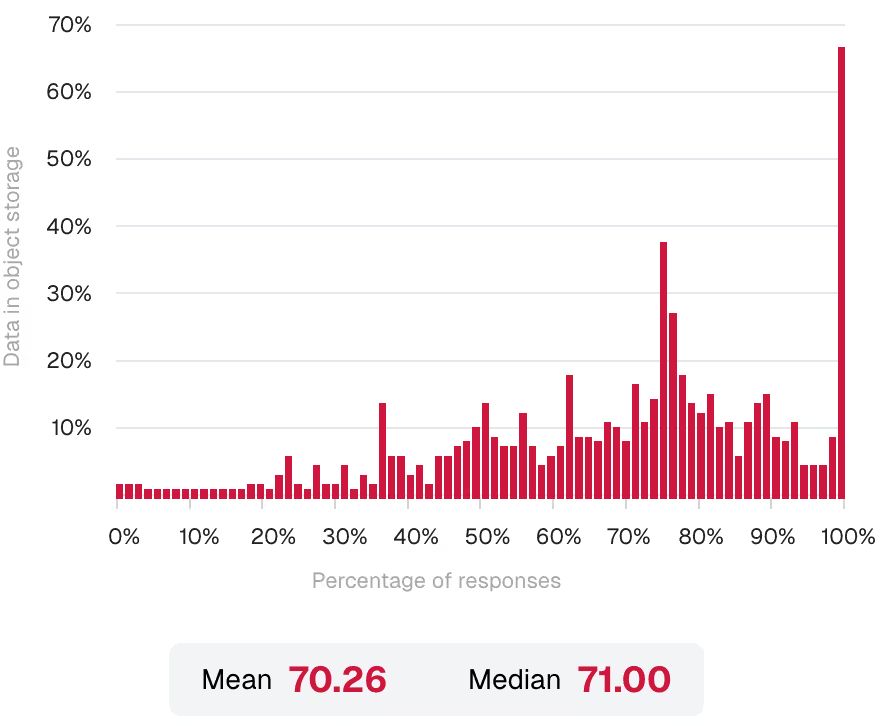

Think about all the data your organization has in cloud native storage TODAY. To the best of your knowledge, what percentage of that data is in object storage?

AI has fundamentally altered the requirements around scale, around performance, and around performance at scale. Enterprise organizations once depended on traditional SAN/NAS architectures, but the explosive growth of unstructured data, now measured in petabytes, has made one thing clear: object storage has become the dominant technology for enterprise storage needs.

MinIO and UserEvidence surveyed more than 600 IT and software development leaders on storage, AI, workloads, and infrastructure. The breakdown of the audience and their responsibilities can be found in the Methodology section at the end of this report.

The most definitive finding in the data was how much of the enterprise’s data resides on object storage. IT leaders indicated that more than 70% of their organizations' cloud-native data was in object storage. That is an overwhelming number, and it is only expected to grow.

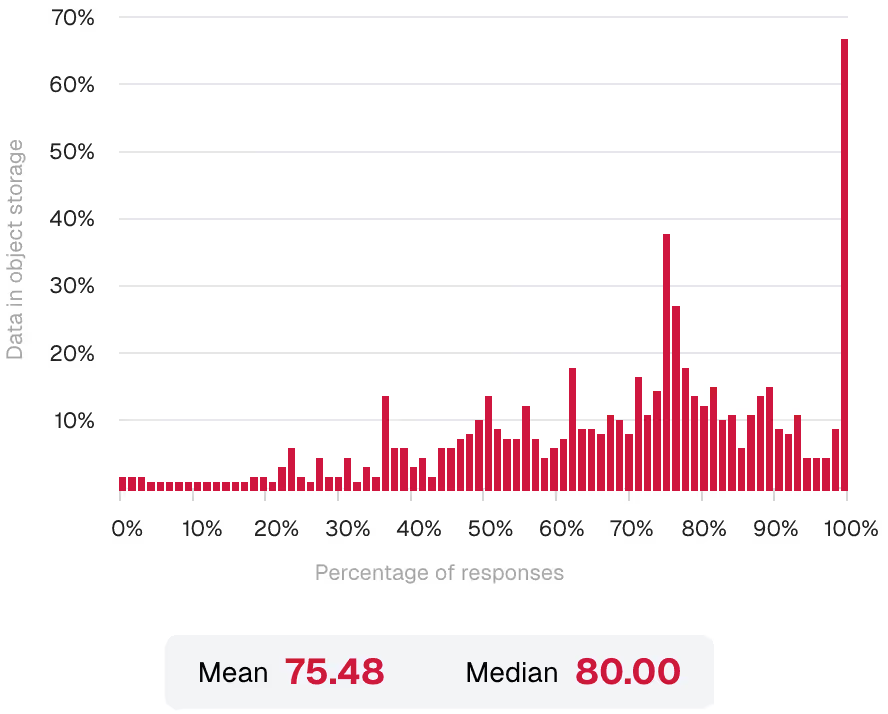

Looking ahead, enterprise leaders expect to grow their investment, predicting that 75% of their cloud-native data will be in object storage two years from now.

The growth of AI and AI-oriented data lakehouses will only accelerate this trend in the coming years, making object storage the defining storage technology for the foreseeable future.

This report outlines how AI is remaking the storage landscape, where enterprises are running their object stores, and which features and functionality they consider most critical in their object stores.

Think about how data storage at your organization is evolving. What is your best guess about what percentage of their data will be in object storage TWO YEARS FROM TODAY?

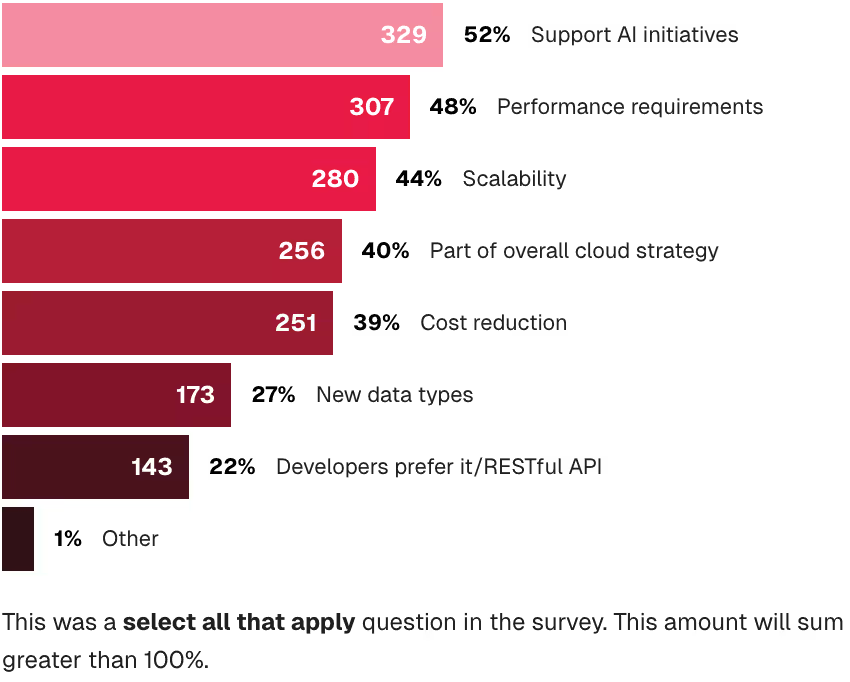

What are the top three business or technology factors motivating your organization's adoption of object storage (public or private cloud)? Choose up to three.

With object storage holding such a commanding position in the enterprise, we asked IT leaders to tell us what motivated the adoption of object storage and what workloads leverage those object stores.

The top three responses are interlocking concepts: AI initiatives require performance at scale. It is easy to be performant at 1 petabyte; it is very different to be performant at 100 petabytes, and that is what AI workloads require.

Given the importance of this question, we asked it slightly differently, getting essentially the same response.

What workloads use object storage? Choose all that apply.

Workloads like cybersecurity anomaly detection require massive ongoing scale and throughput for high-speed analytics. Most vendors recommend splitting the architecture into smaller namespaces because their systems cannot handle a single massive exascale namespace. Object storage, by contrast, is built for exactly these kinds of workloads.

AI requires performance and scale, which object storage delivers. That is why object storage will continue to win going forward.

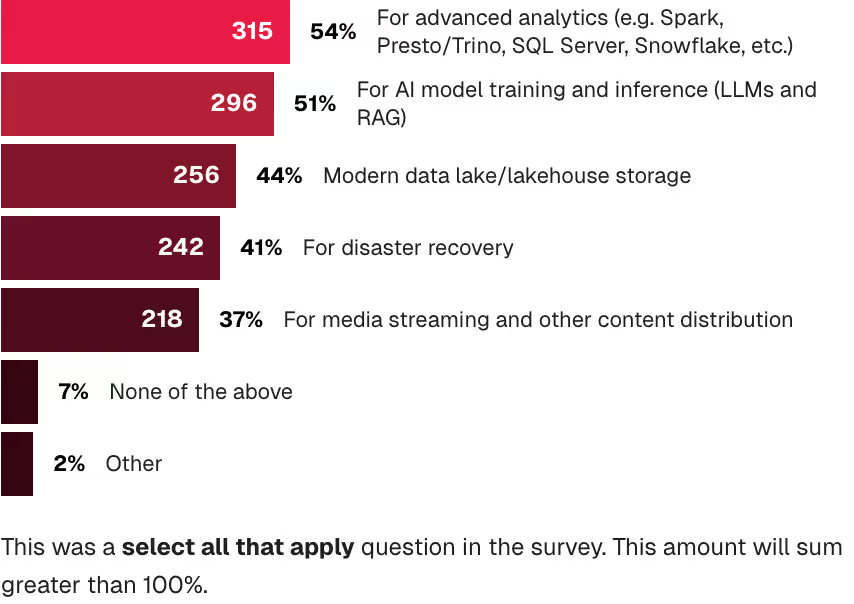

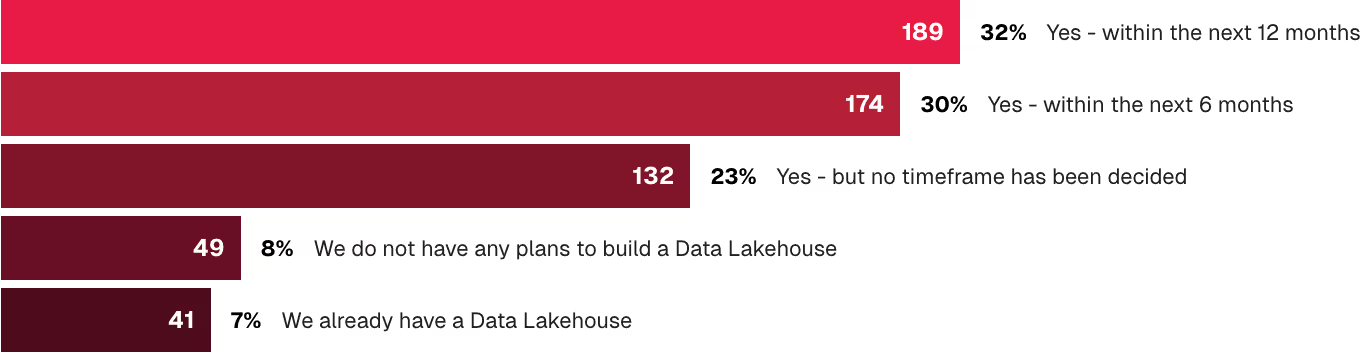

Given that data lakehouses are built exclusively on object stores, and that 71% of respondents already have a data lakehouse or plan to build one in the next 12 months, lakehouses represent a strong (and likely growing) object storage priority.

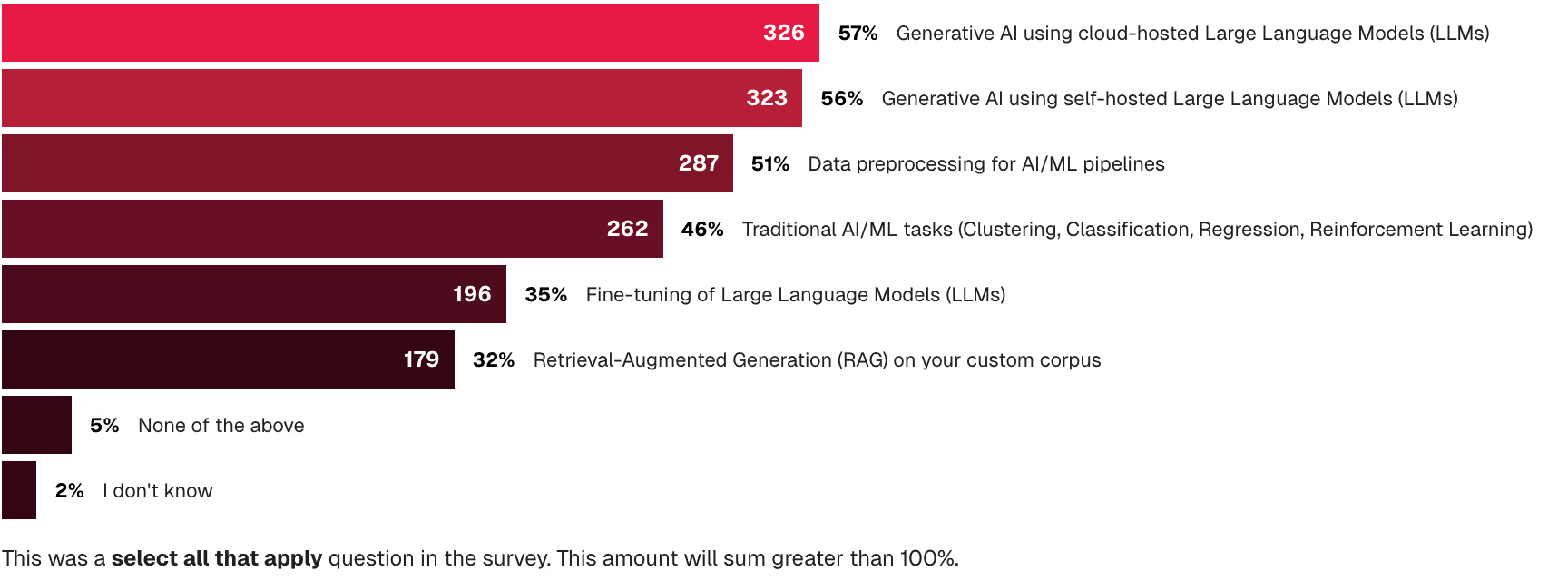

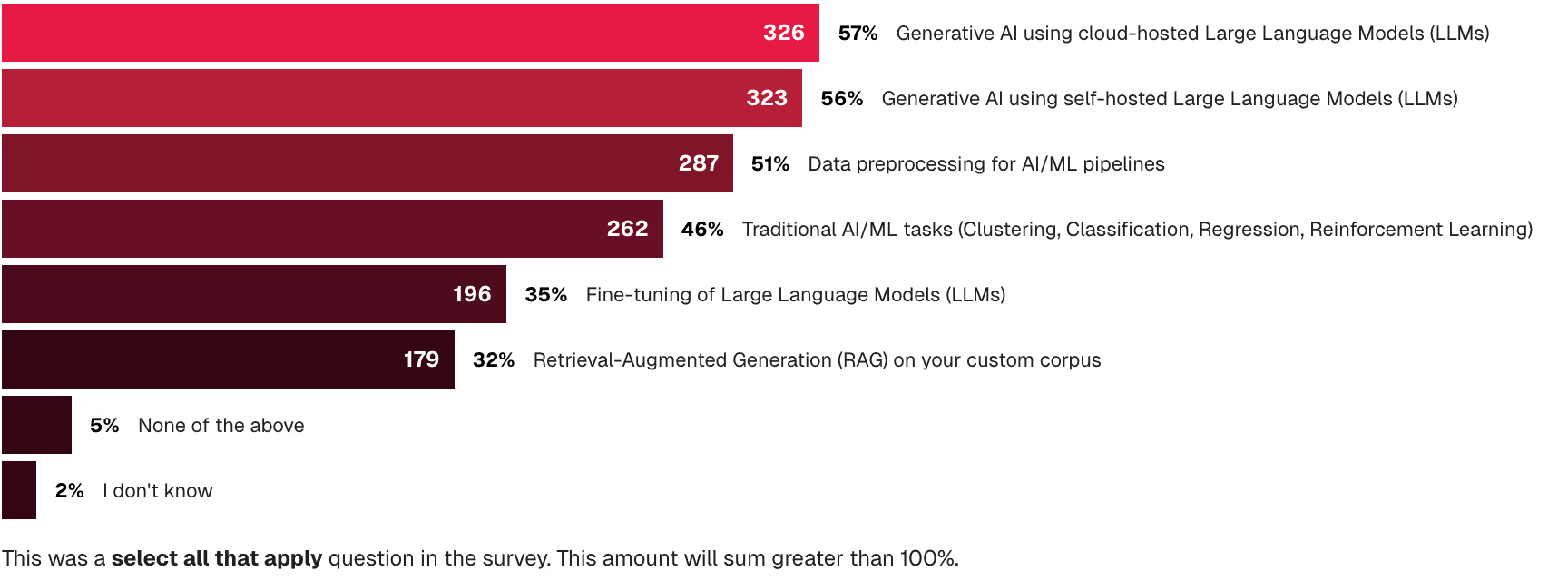

All three of these use cases carry significant potential for industry transformation. But from the meteoric rise of generative AI to the continued importance of traditional forms of AI, these AI workloads are currently the most powerful force behind new adoption of object storage.

Modern object storage delivers the attributes needed for workloads as demanding as AI/ML: performance, scalability, and security. That's why developers are turning to object storage to store a wide range of datasets to train models, fine-tune models, and build vector databases for generative AI. As the number of AI use cases grows, so will the datasets, models, and vector DBs.

What types of training sets does your organization send to object storage for AI analysis? Choose all that apply.

We asked IT leaders what types of training sets their organization sends to object storage for AI analysis, and the responses ranged across 10 different types of data, from geospatial to application data. The prominence of application data was notable. It is a broad category, so we should not read too much into it, but it does suggest that IT leaders are looking to generate business value from core business data.

What types of AI workloads does your organization currently run or plan to run on your object store? Choose all that apply.

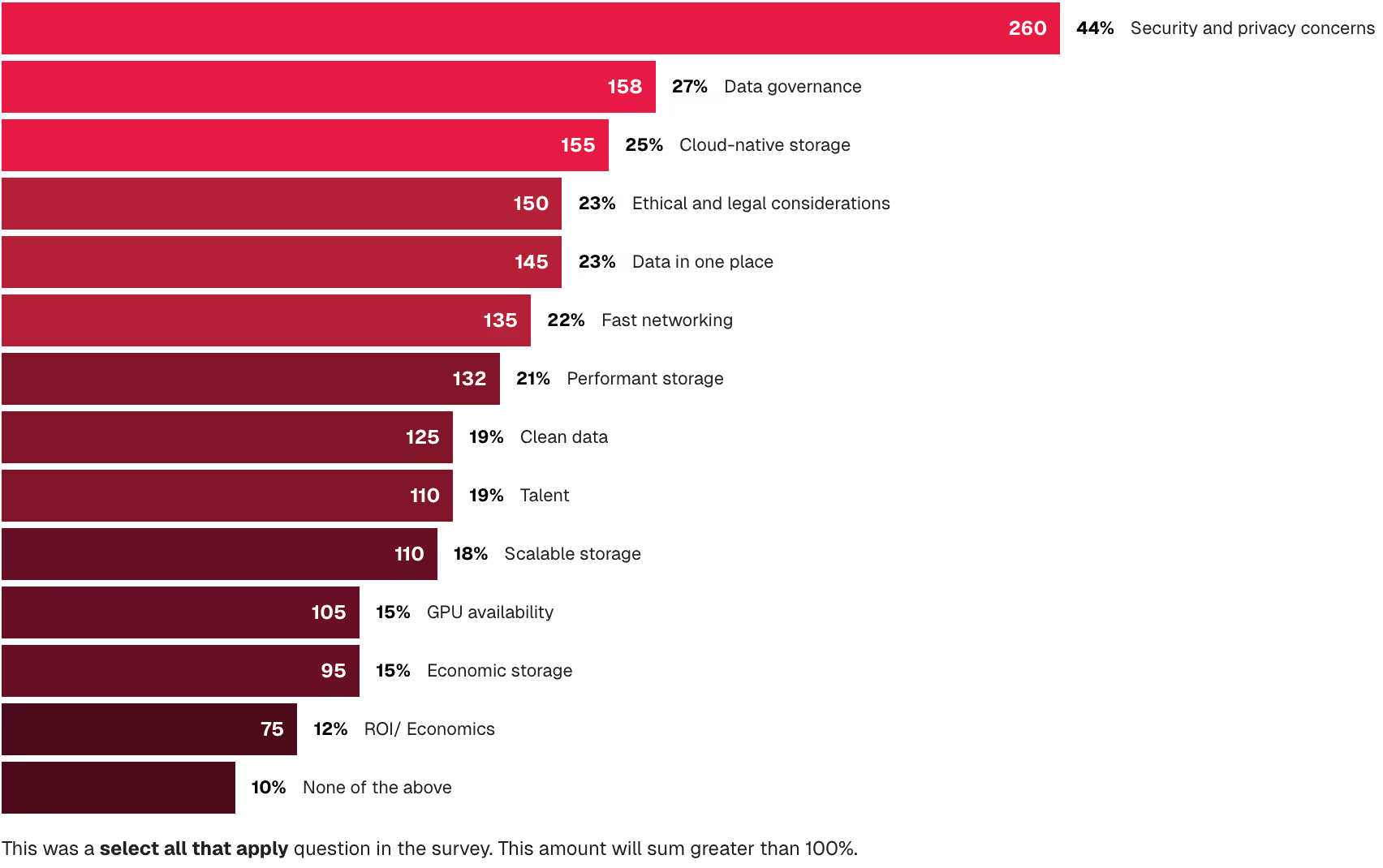

What are the top three most challenging elements of AI for your organization? Choose up to three.

Respondents reported that their top three most challenging elements of AI are:

This challenge ranked at the top of IT leaders' list by a wide margin. Because AI models raise many open questions about how data is handled and protected, enterprises want control over their data. They're worried about data leakage from public cloud providers and don't want to compromise their competitive advantage. When it comes to AI, they don't want to share or send their data to the mainstream LLMs just for inference; ideally, they keep everything in-house. There is a balance to strike here. Public clouds have plenty of GPUs, and time to insight matters, but our data indicates a preference for the private cloud: it over-indexes as the location for GenAI workloads compared with where current workloads run.

Like the security concerns, AI data governance is all about control. Enterprise leaders need to understand what is in their data and closely supervise who has access to it. For example, when AI or ML engineers need to pull raw data from a private cloud and push it to a public cloud provider like AWS or Google Cloud to fine-tune an AI model (and then send the data back to the private cloud), the data should be encrypted in transit and at rest. Furthermore, both the private cloud and the public cloud should integrate with a common identity provider for authentication and authorization. Strong data governance needs robust access control and records of who accessed the data and how it changed.

Cloud-native storage offers support for enterprise AI needs like containerization, orchestration, RESTful APIs, and microservices. Cloud-native storage is software-defined and standards-based, bringing a massive ecosystem that is ready to go, right out of the box. Hardware-defined SAN/NAS technologies are ill-suited for the cloud-native world. After all, you can't containerize an appliance.

We also asked these IT leaders to tell us how they are leveraging those training sets, specifically for AI workloads.


What workloads use object storage? Choose all that apply.

As we see from the research, traditional AI continues to deliver value to the organization. Another area where IT leaders see significant investment is their data lakehouse architectures. When we asked respondents what workloads use object storage, we found that advanced analytics was the #1 response and modern data lakes/lakehouses the #3 response.

Many of those advanced analytics workloads are built on a data lake/lakehouse architecture, making them a critical on-ramp for more sophisticated AI workloads. More importantly, those workloads are blurring the line between “advanced analytics” and AI, as recent announcements from Snowflake, Databricks, Dremio, Starburst, Athena, and BigQuery demonstrate. It should be noted that every one of those products is built on an object store.

Do you have plans to build a Data Lakehouse with object storage in the near future?

The cloud is an operating model, not a location. When adopted correctly, leveraging the core pillars of containerization, orchestration, RESTful APIs (S3), microservices, and software-defined infrastructure, the entire stack – and therefore the data that resides on it – becomes portable.

The public cloud was built on object storage. You see this in the success of AWS S3, Azure Blob Storage, and Google Cloud Storage. They are the primary storage technologies on those platforms and they are all object storage.

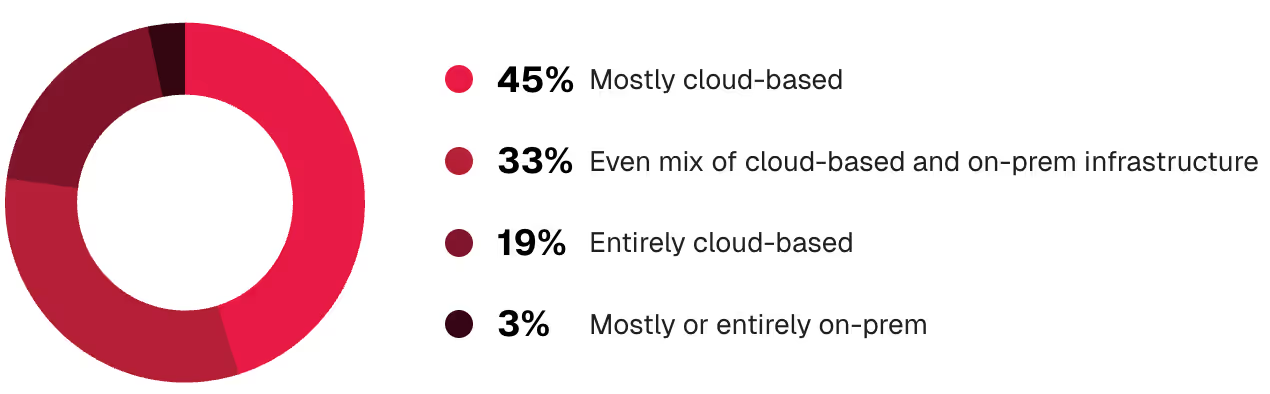

Accordingly, it is unsurprising that the public cloud leads when respondents describe their adoption of cloud infrastructure.

How would you describe your organization's overall adoption of cloud infrastructure?

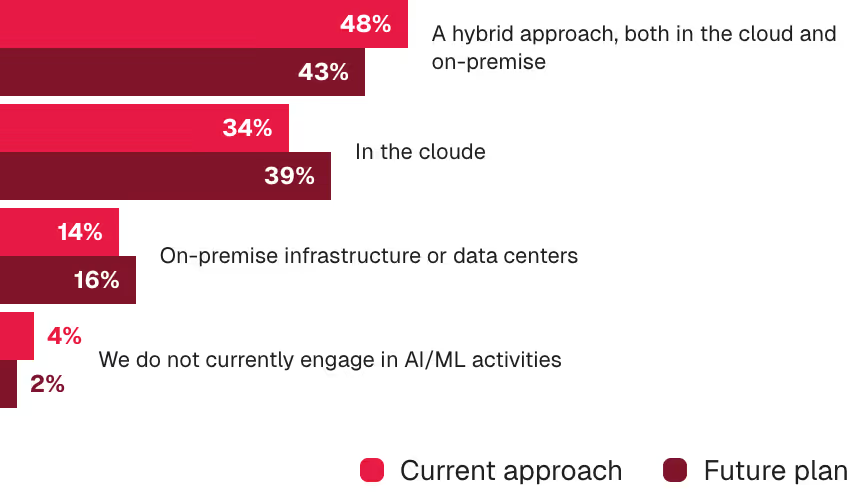

What is your organization's approach to running AI/ML workloads?

Currently, the largest group of respondents (48%) is taking a hybrid approach, running AI/ML workloads in both the public and the private cloud. This is a data point to track going forward: economics will likely shift the balance sooner rather than later. Enterprise cost concerns are increasingly making the public cloud a less viable option for AI/ML, which is already driving both more repatriation and more private cloud usage.

Finally, lest we fall into the trap of thinking that enterprises deal in absolutes with regard to the cloud, we asked the respondents to identify how many clouds they utilize. The answer is in line with other research – which is to say the modern enterprise is a multi-cloud enterprise.

Modern object storage offers a versatile solution to support diverse use cases for enterprise organizations, well beyond the archival and backup purposes it was known for in the past. As enterprises have expanded their reliance on object storage, their expectations of object store capabilities have risen as well.

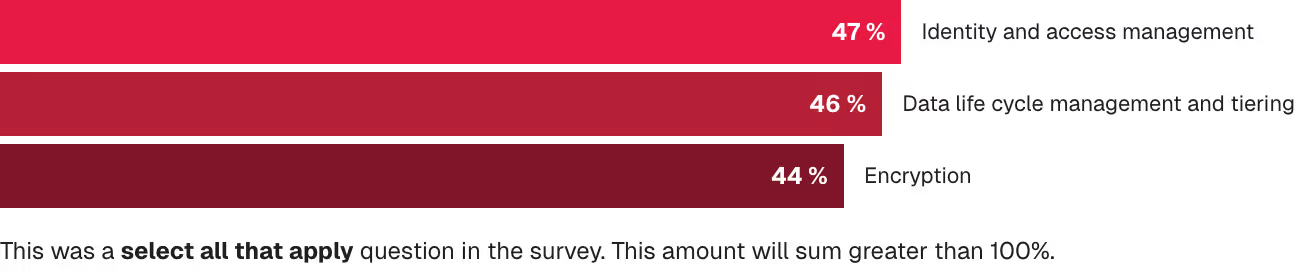

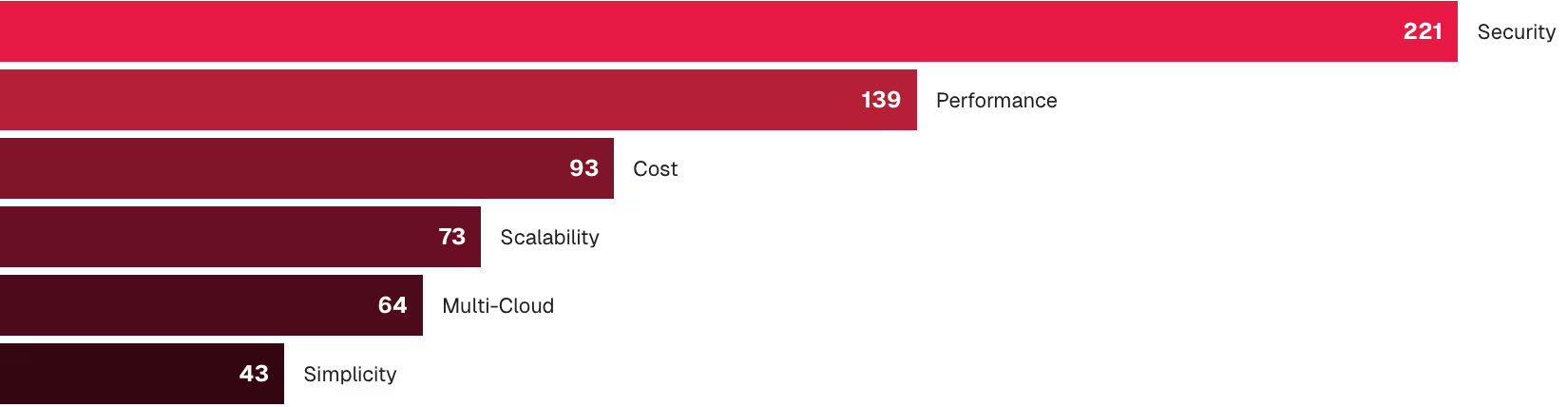

Our research found that the top three most important capabilities of an object store (public or private) are:

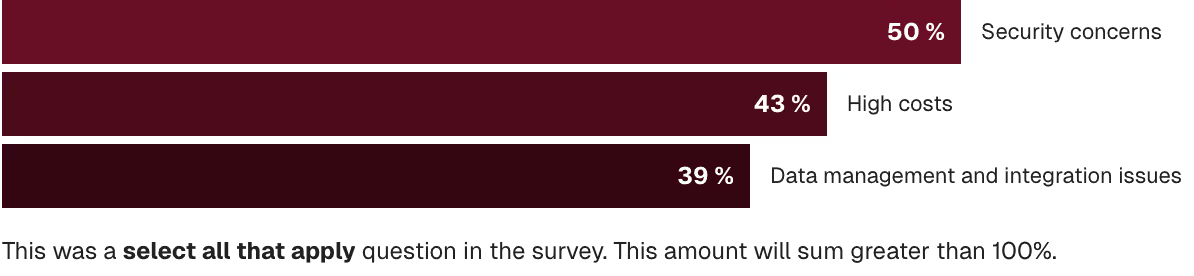

While not in the top three, performance issues (37%) and scaling problems (31%) also made a strong showing as private cloud challenges.

Aside from cost considerations (more on that below), these results reveal three key factors driving the selection of storage technology in general, and of object storage in particular:

We would be surprised if security weren't a key concern and capability, regardless of the storage architecture. Today, encryption at rest and encryption in transit are table stakes; more sophisticated security is now a requirement. Integration with well-known identity providers for authentication and authorization makes it easy to fit object stores into existing data centers.

But one critical component of security is simplicity. Complexity is its own attack surface: the greater the complexity, the more vulnerable the system. Object storage that offers security with simplicity gives enterprises the flexibility to move their data to another cloud or private cloud provider, or to embrace a new solution, without compromising their data.

Cost still matters when choosing the right storage solution, object or otherwise. On its own, cost isn't the key value driver for object storage, but it can distinguish one solution from another, especially when comparing the private and public cloud.

The data tells two stories on the cost front, both of which should be familiar to IT leaders. The first is that the private cloud offers better economics than the public cloud. The rise of generative AI has already produced massive public cloud costs. Nearly 40% of respondents said that they are “very” or “extremely” concerned about the costs of running their AI/ML workloads in the cloud, with another 29% citing moderate concerns. Beyond a certain scale, accessing data in the public cloud, particularly to train and fine-tune AI models, is increasingly difficult and expensive. Year-over-year cloud spending has risen 30% on average, and a report by Tangoe found that 72% of IT leaders say cloud spending is unmanageable.
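To make the compounding concrete, here is a minimal Python sketch that assumes the 30% average year-over-year increase cited above simply stays constant. That constant rate and the normalized baseline of 1.0 are illustrative assumptions, not survey results or a forecast.

```python
def compound(value: float, rate: float, years: int) -> float:
    """Return value after growing at a constant annual rate for the given number of years."""
    return value * (1 + rate) ** years


# Hypothetical baseline: today's cloud bill normalized to 1.0 (assumption).
# Assumed constant 30% year-over-year growth, per the average cited in the text.
for year in range(4):
    print(year, round(compound(1.0, 0.30, year), 2))
```

Under that assumption, spend roughly doubles in under three years (1.3³ ≈ 2.2), which is why a steady-looking annual percentage can still feel "unmanageable."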

"We have a lot of data - and it turns out that storage in the cloud is one of the most expensive things you can do in the cloud, followed by AI in the cloud…"


— Rebecca Weekly, VP of Platform and Infrastructure Engineering, GEICO

Enterprises are eager not to repeat the mistakes they made previously by racking up astronomical cloud storage costs, which, as we know, gave rise to the entire Cloud FinOps industry. As both data storage and computational costs explode due to AI workloads, the public cloud is becoming a less viable option, driving more private cloud usage and even repatriation to the private cloud. Public clouds have plenty of GPUs and are a great place to run smaller experiments to learn. In production, enterprises need control and simplicity.

The second story the data tells is that in the private cloud, the appliance model is sub-optimal from a cost perspective. Forty-three percent of respondents cited cost concerns as the second biggest challenge of a private cloud approach to object storage. Enterprises are tired of the appliance model, which creates vendor lock-in and reduces optionality. Instead, they want smart software, dumb hardware, and pricing to match what their deployment calls for.

Software-defined storage offers the flexibility, control, and leverage enterprises are looking for; that's why it's the architecture of choice now and going forward. At the same time, plenty of organizations are choosing to repatriate certain workloads to private cloud infrastructure because of the cost benefits.

As noted, the cloud is an operating model, not a location. Storage that aligns with that is cloud-native storage. Storage that doesn’t is generally an appliance.

Four out of 10 IT leaders are struggling with data management and integration issues with their private cloud object storage. The reason is likely that they have adopted an appliance. You can’t containerize an appliance. It isn’t cloud native.

Appliance-based storage operates as a fixed, hardware-centric solution that is inherently limited by its physical constraints and static architecture. It lacks the flexibility to integrate seamlessly with containerized applications and modern cloud-native workflows. Appliances often require manual scaling, forcing organizations to overprovision storage, resulting in inefficiencies and higher costs. Additionally, their reliance on proprietary hardware makes them unsuitable for environments that demand agility, scalability, and distributed operations.

In contrast, software-defined, cloud-native object storage embodies the principles of the cloud operating model. It is decoupled from specific hardware, enabling deployment on any infrastructure, whether on-premises, in the public cloud, or in hybrid setups. Cloud-native object storage scales elastically, matching capacity with demand, and integrates seamlessly with Kubernetes and other orchestration tools. This model also leverages APIs for automation and programmability, empowering IT teams to manage large-scale, distributed data environments efficiently.

These factors drive selection criteria. When we asked these IT leaders to stack-rank the most important factors in selecting an object store (public or private), they provided the following responses:

In your opinion, what are the most important factors when selecting an object store (public or private cloud)? Rank the following from 1 to 6 where 1 = MOST important and 6 = LEAST important.

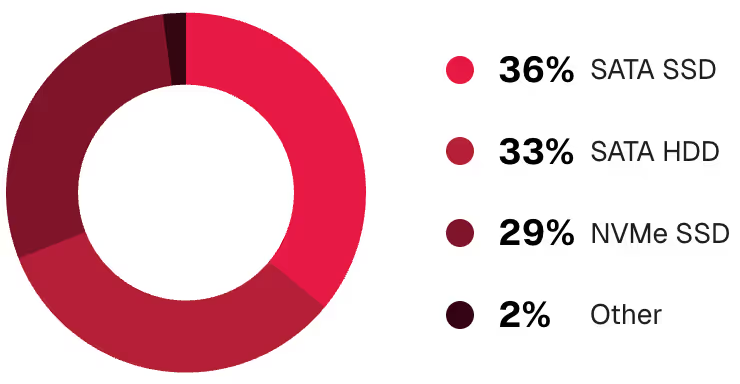

Operational details matter. We asked our respondents to share how their storage systems are architected, the hardware behind them, and the teams primarily responsible for data storage. Here is some of that data.

Scalability has always been one of the driving forces behind the rise of object storage. Now, with ever-increasing demands for flexibility in storing and retrieving massive amounts of data for AI and analytics, enterprises need higher performance at a greater scale than ever before. Across infrastructures, object storage delivers the capacity, performance, and simplicity to scale enterprise data to new heights.

Our research found that nearly 30% of cloud-based object storage deployments are at least 10 PB in size. This is the point at which cracks begin to appear for most technologies. This is where legacy APIs like POSIX become too chatty or third-party metadata databases fail. More importantly, every projection in the market indicates that enterprise data is growing at a CAGR of more than 60%.

That means the typical object storage cluster will increase in size. This is due to the continued proliferation of unstructured data (estimated at 55-65% annual growth) for training both traditional and generative AI models and an overall increase in object storage usage across applications. The cloud object storage market is also on track to nearly triple in size from $6.5B in 2023 to $18B by 2031, indicating wider adoption and larger deployments.
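The growth figures above can be made tangible with a little arithmetic. The Python sketch below assumes growth stays at a constant annual rate, which real data growth will not do exactly. It computes the doubling time at the 60% CAGR cited for enterprise data, projects a hypothetical 10 PB deployment forward two years at that rate, and derives the annual growth rate implied by the $6.5B (2023) to $18B (2031) market figures. That implied rate is our own calculation from those two endpoints, not a number reported in the survey.

```python
import math


def doubling_time(rate: float) -> float:
    """Years for a quantity growing at a constant annual rate to double."""
    return math.log(2) / math.log(1 + rate)


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


# Enterprise data growing at the 60% CAGR cited in the text (assumed constant).
print(f"doubling time at 60%: {doubling_time(0.60):.2f} years")

# Hypothetical 10 PB deployment growing at 60% per year for two years.
print(f"10 PB after 2 years: {10 * 1.6 ** 2:.1f} PB")

# Market endpoints cited in the text: $6.5B in 2023 -> $18B in 2031 (8 years).
print(f"implied market CAGR: {implied_cagr(6.5, 18.0, 2031 - 2023):.1%}")
```

At 60% per year, data doubles roughly every year and a half, so a 10 PB cluster would reach about 25.6 PB in two years. "Nearly triple" over eight years works out to roughly 13.6% per year for the market.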

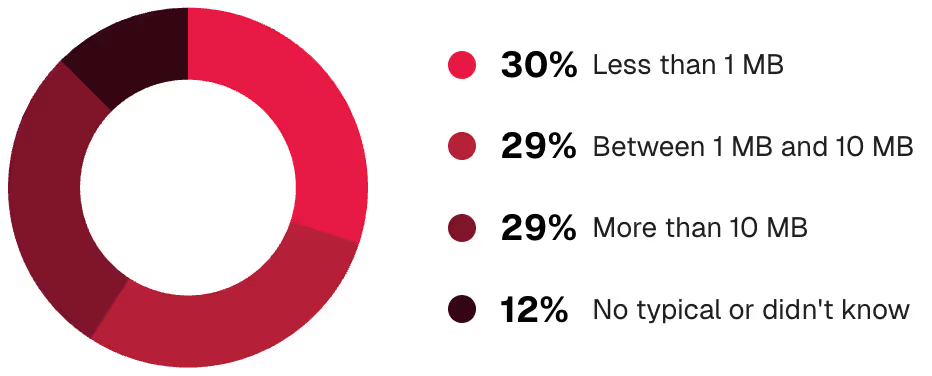

The size of the object also makes a difference. The ability to perform well with small objects is a distinguishing characteristic of elite object stores, and it matters because most objects are smaller than 10 MB.
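A back-of-the-envelope object count shows why small-object performance and metadata handling matter at the 10 PB scale discussed earlier. This Python sketch assumes decimal units (1 PB = 10^15 bytes) and a single uniform object size. Both are simplifications, since real deployments mix many object sizes.

```python
PB = 10**15  # bytes, decimal units (assumption)
MB = 10**6   # bytes, decimal units (assumption)


def object_count(capacity_bytes: int, object_size_bytes: int) -> int:
    """Number of uniformly sized objects that fit in the given capacity."""
    return capacity_bytes // object_size_bytes


capacity = 10 * PB  # hypothetical 10 PB deployment
for size in (10 * MB, 1 * MB, 100_000):  # 10 MB, 1 MB, 100 KB objects
    print(f"{size:>12,} bytes/object -> {object_count(capacity, size):,} objects")
```

Even with 10 MB objects, a 10 PB deployment holds on the order of a billion objects. Each tenfold drop in object size multiplies the number of objects, and so the metadata the system must track, by ten.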

What is the size of a typical object in your organization’s object store(s)?


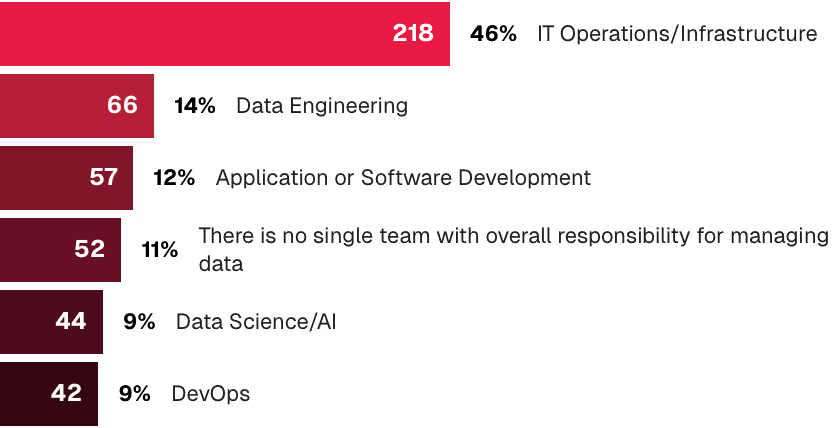

What team has PRIMARY responsibility for managing data storage at your organization?

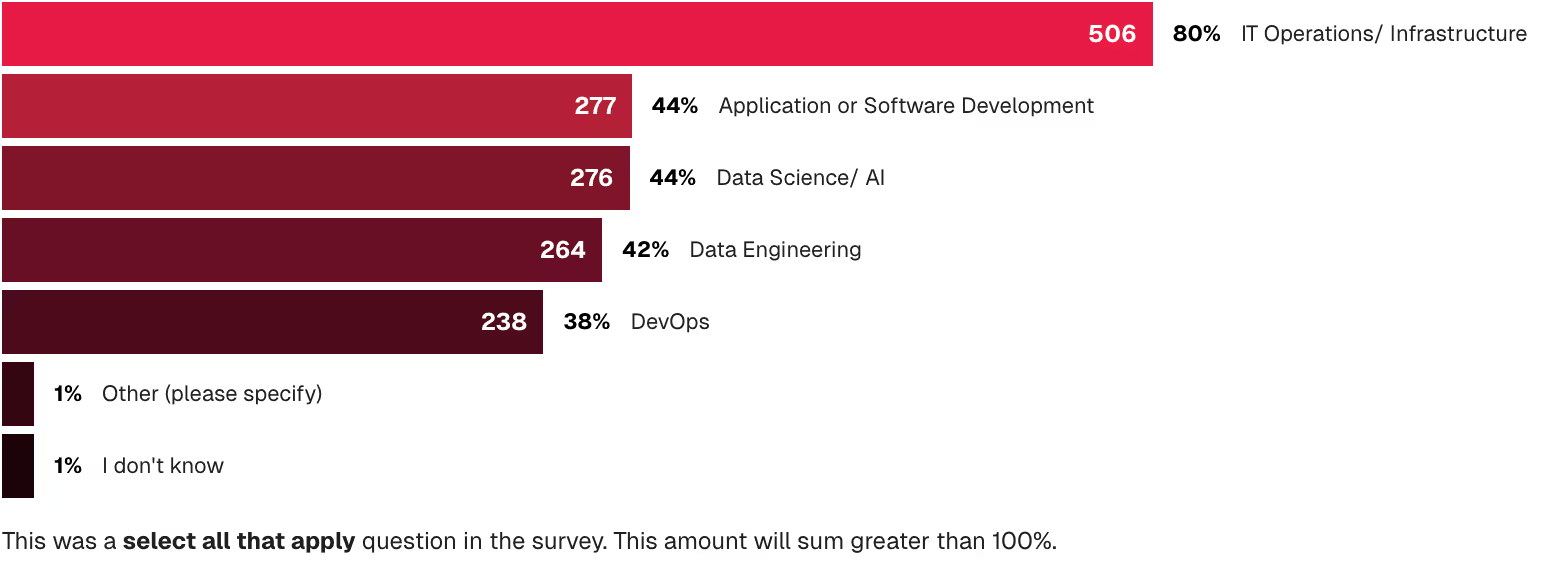

The storage team dynamic is an area of interest. While IT Operations/Infrastructure is clearly the leader, it is by no means the only player in the management of data storage. Multiple teams are engaged, which makes sense given that data is a core asset of almost every organization at this point.

What team(s) are involved in managing storage at your organization? Choose all that apply.

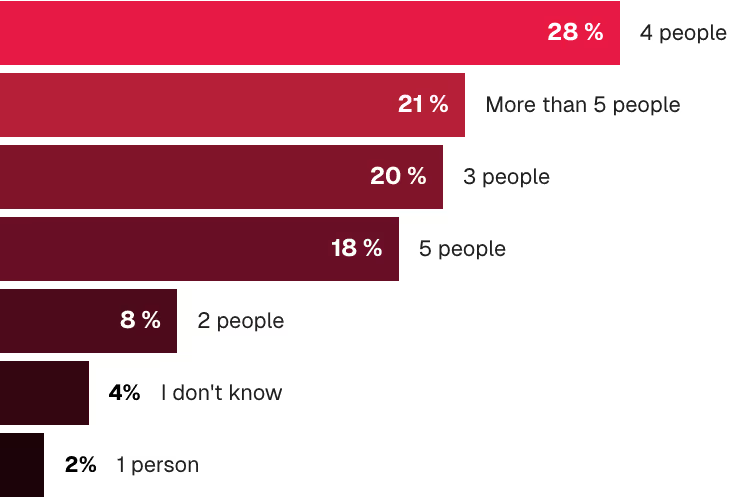

In your experience, what size team is required to manage a PB of data?

Managing a PB of data takes a full team. At the surveyed organizations, more than two-thirds of respondents indicated that four or more FTEs were required to manage a PB of data.

In 2024, most enterprise data is already on object storage— and IT and data leaders expect that to continue to grow. The two key reasons for this dominance are clear:

Object storage isn't just supporting AI but driving it, by meeting security needs, mitigating cost and scalability issues, and supporting high performance across workloads.

Enterprises are prioritizing performance at scale for their data storage, perhaps above all else. They want the kind of flexibility and simplicity that legacy SAN/NAS technologies struggle to deliver in the cloud-native world.

Climbing public cloud costs aren't sustainable, but cost is clearly secondary to the types of workloads and the capacity to scale. We can assume that, forced to choose between cost and capabilities, enterprises would zero in on the latter. But of all the available solutions, software-defined storage running on the private cloud is the most likely to let organizations have it all.