A vector database is a specialty database used to store, index, and query these vectors. Vectors are numerical representations of unstructured data, such as text, images, or audio. In this lesson, we used text to demonstrate vectors and the use of a vector database, but other forms of unstructured data can be vectorized and the resulting vectors stored in a vector database. This allows for efficient semantic searches, meaning you can find items that are semantically similar to a given query by measuring the distance between their vector representations. Vector databases are designed to handle large amounts of high-dimensional data, which can be used to search based on semantic meaning rather than just exact keyword matches.

To truly understand vector databases, we need to understand vectors, how vectors are used to represent data in generative AI, and semantic searches. Once we understand these basic concepts, we can define a vector database.

A vector is an array of numbers that represents a direction and magnitude in some n-dimensional space. For computer science professionals, vectors are simple lists, tuples, or tensors. It is important to note that they are not scalars or matrices.

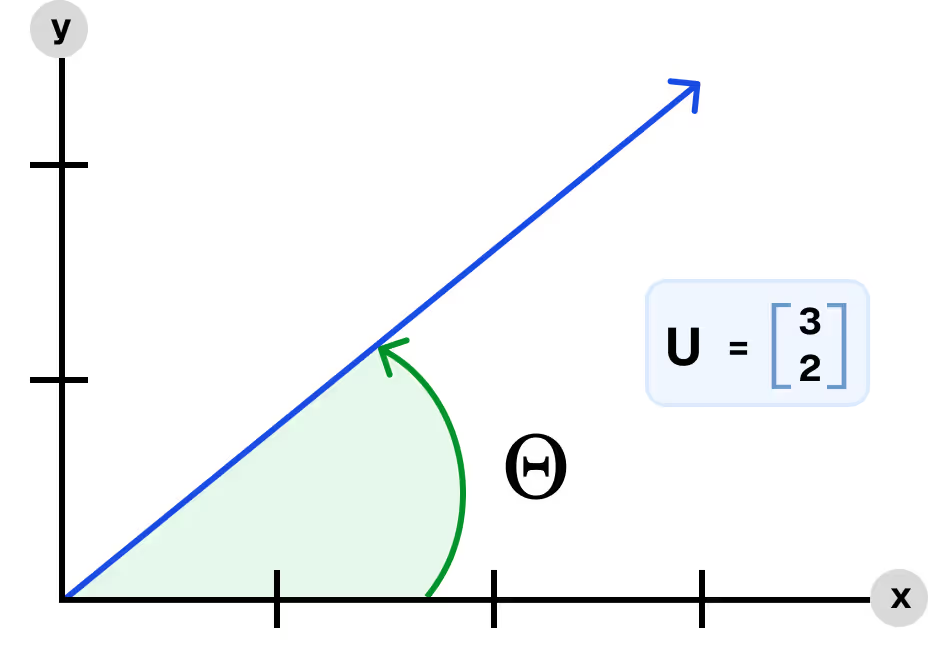

The simplest way to demonstrate a vector and its properties is to create and graph a two-dimensional vector because it can be easily visualized on a two-dimensional computer screen. Consider the following vector:

u = (3, 2)

Mathematicians often prefer to write vectors vertically, but this approach wastes a lot of paper when the number of dimensions increases, which, as we will see, happens with generative AI.

If we graph the vector above, then it would look like the graph below. The x value is the first number, and the y value is the second number. Note that it is a convention to draw vectors as arrows since they represent quantities that have both direction and magnitude.

The magnitude of a vector is the length of the arrow shown above. The direction is the angle of the arrow with respect to the x-axis. These values can be calculated using trigonometry and geometry, respectively, but we will not do that here because that is what a vector database will do for us.

As stated previously, the example above is a two-dimensional vector because it has two numbers. If it had three numbers, then it would be a three-dimensional vector. The human mind can also visualize a three-dimensional vector. However, where things get weird is when we go beyond three dimensions. It is impossible to create a visualization of a four-dimensional vector; however, all the same rules apply: it still has a magnitude and a direction. The math to calculate the direction and magnitude becomes increasingly complicated as we add more dimensions. Luckily, the advances in GPUs make these calculations doable at scale. Finally, in generative AI, it is common to use vectors that have thousands of dimensions. ChatGPT’s largest model uses 3072 dimensions. Why does generative AI need so many dimensions? The more dimensions a vector contains, the more information it can hold. But what kind of information does a vector contain in generative AI? Let’s address that next.

All models, whether they are small models built with Scikit-Learn, neural networks built with PyTorch or TensorFlow, or large language models (LLMs) based on the transformer architecture, require numbers as inputs and produce numbers as outputs. For generative AI, this simple fact means text must somehow be converted into some numeric representation. The numeric representation used by generative AI is vectors. A vector can represent an individual word, an entire document, or a chunk of a document.

A generative AI solution typically requires access to private documents containing your company's proprietary knowledge to enhance the answers produced by LLMs. This enhancement could take the form of retrieval augmented generation (RAG) or LLM fine-tuning. Both of these workloads require your documents to be converted to vectors via an embedding model. (Embedding models are special models that are used to convert text to vectors. How embedding models perform this conversion is beyond the scope of this lesson. For now, all we need to know is that they transform text to vectors.) Fine-tuning requires each word in a document to be converted to a vector, whereas RAG typically handles document chunks. Words with similar meanings will have vectors that have similar magnitude and direction. The same is true for document chunks and entire documents; if they are about the same topic, they will have similar vectors.

To better understand the benefits of vectors, let’s look at a conventional text search that does not use vectors.

Let’s say you want to find all documents that discuss anything related to “artificial intelligence”. To do this with a conventional database where the document text is stored in a text field, you would need to search for every possible abbreviation, synonym, and related term of “artificial intelligence.” Your query would look something like this:

This is a lexical text search, which is arduous and prone to error, as it requires an engineer to identify all possible synonyms and abbreviations of the search term. Most importantly, this is not a semantic search. It is a text search. Just because a document contains the term “artificial intelligence” does not mean that the document is about artificial intelligence. All we know about the documents returned from the query above is that they contain one of our synonyms or abbreviations.

Let’s take a look at how vectors and a vector database perform semantic searches.

Once documents are transformed into vectors, the vectors and the original text are stored in a vector database. Vector databases facilitate semantic search. Let’s revisit our previous example and look at the difference between semantic search and a text search. A vector database can process a request like the one below. Behind the scenes, the phrase “artificial intelligence” is turned into a vector, and the vector database looks for other vectors with similar magnitude and direction. Once similar vectors are found, the vector database will return the text that the vector represents. This query is easier to create and will run much faster than our lexical text-based search. However, the most significant difference is that the results are documents about the topic or concept we are interested in.

Semantic search is a key capability that is needed to perform retrieval augmented generation (RAG). RAG enables organizations to unlock their proprietary knowledge, previously locked up in documents, for use in generative AI applications such as recommendation systems, chatbots, document summarization, contract generation, and more.

Now that we have seen vectors and vector databases in action, let’s look at a few additional considerations for using vector databases.

Storage requirements are primarily determined by the number of vectors, their dimensionality (i.e., the number of elements in each vector), and the data type used to represent them (e.g., float32, float16). Storage also depends on whether indexes are used for efficient search, as they can significantly increase space usage. Indexes, used for efficient similarity searches, can require significant storage, potentially three to four times the size of the raw vectors.

Example Calculation: Let's say you have 1 million 768-dimensional vectors, and each dimension is stored as a float32 (4 bytes).

Then we have:

Milvus - Milvus is an open-source vector database. Milvus utilizes MinIO as its primary storage solution for persisting raw vector data and index files.

LanceDB - LanceDB is an open-source vector database. It is built upon the Lance columnar data format, which is optimized for low-latency semantic searches. LanceDB utilizes MinIO as a reliable backend for storing the underlying Lance format files.

Weaviate - Weaviate is an open-source vector database. Weaviate leverages MinIO for backing up its indexes and data objects.

Because embeddings capture semantic meaning rather than just exact matches, vector databases enable new kinds of intelligent applications. Here are some of the most common use cases:

Best practices for vector databases depend upon your use case, the lifecycle of your data, the data type being embedded, and your preprocessing pipeline.