This post first appeared on The New Stack on April 18th, 2025.

Get a comprehensive summary of the major compute, networking, storage and partnership announcements from NVIDIA’s biggest event of the year.

If you follow the tech news, you have read a lot about NVIDIA and its graphics processing units (GPUs). However, it would be incorrect to conclude that NVIDIA is solely focused on GPUs. My biggest revelation from NVIDIA’s GTC 2025 conference last month was that NVIDIA innovates across compute, networking and storage. Most of these innovations are about AI, but gamers should not be concerned; there is a new RTX chip for you.

The announcements and key technologies in the spotlight of CEO Jensen Huang’s keynote presentation show what NVIDIA is doing across compute, networking, storage and its partnerships. NVIDIA also wants an offering for AI practitioners of all sizes: engineers doing experiments on their desktops; enterprises running AI infrastructure (which NVIDIA sees as a $500 billion opportunity); hyperscalers that need specialized photonic-based networking equipment; and finally, organizations pushing the boundaries of physical AI with robotics.

Let’s start with compute, since that is what put NVIDIA on the map with its first gaming GPUs.

The GeForce RTX 5090 will be the new high-end desktop GPU for gamers and creative professionals. (Did you know that RTX stands for Ray Tracing Texel Extreme? Ray tracing is a technique used in gaming to simulate realistic lighting and shadows.) Gamers will benefit from lower ray-tracing latency. The GPU is based on the NVIDIA Blackwell architecture and has 32GB of high-speed GDDR7 (Graphics Double Data Rate 7) memory and 3.4 petaflops of FP4 compute. Compared to the RTX 4090, it is 30% smaller and 30% better at dissipating heat. The bad news is that this GPU has a $1,999 price tag. If you are an AI/ML engineer, you may be tempted to use it for AI experiments and workloads, but before you do, read about DGX Spark (below).

NVIDIA CUDA-X is not a new announcement; it has been an ongoing effort for a while. CUDA-X is a collection of optimized libraries built on CUDA to accelerate AI, high-performance computing (HPC) and data science workloads. These libraries are designed to help developers take full advantage of NVIDIA GPUs for a wide range of applications without needing to write low-level GPU code. Many of these libraries are drop-in replacements for existing libraries that run on a CPU. For example, the cuDF library can be used to replace Pandas and Polars with zero code changes. The full list of libraries presented is:

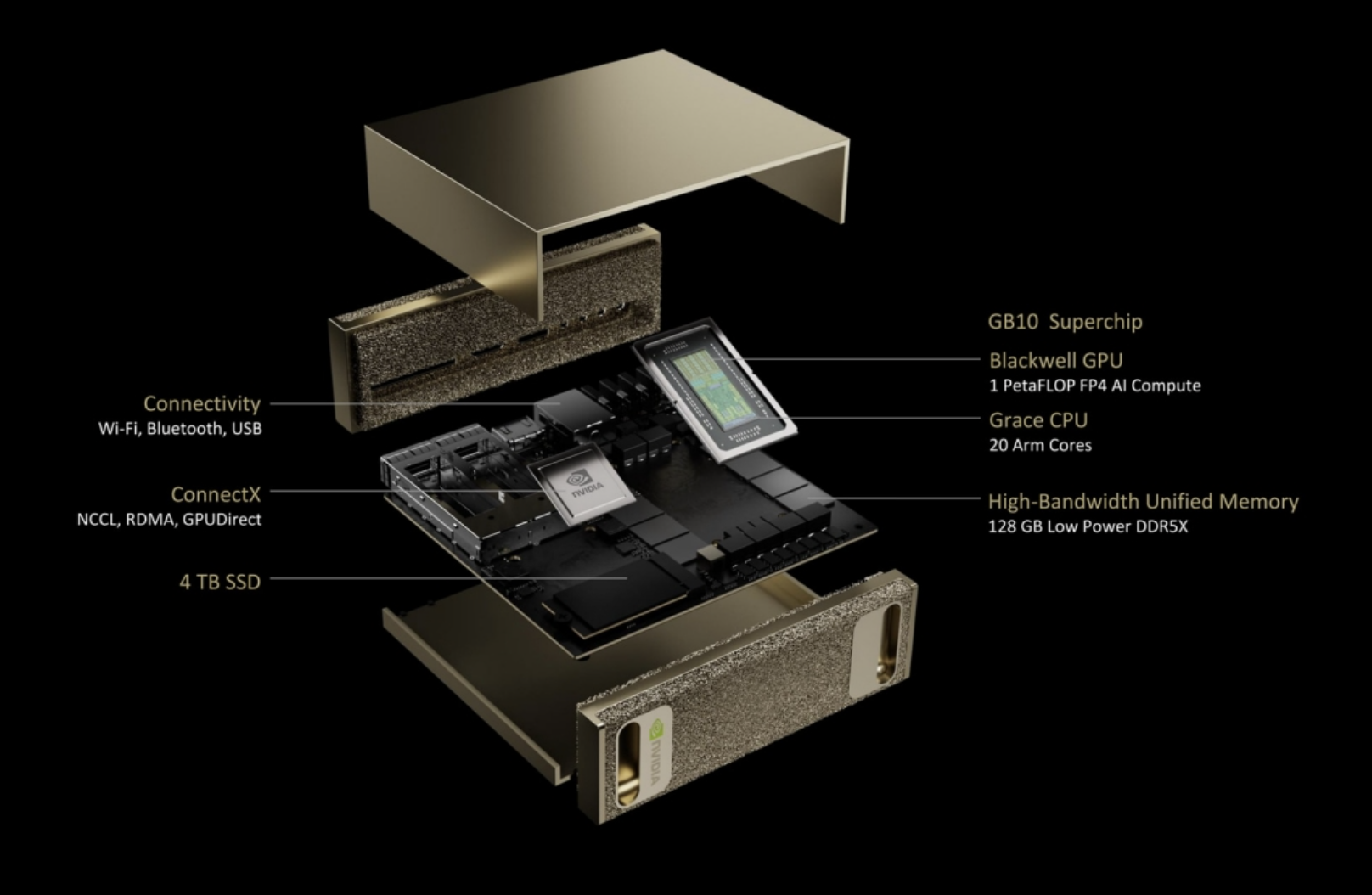
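To make the drop-in idea concrete for cuDF, here is a minimal sketch of an ordinary Pandas script. The data and column names are made up for illustration; nothing in the script refers to a GPU, which is the point.

```python
import pandas as pd

# Hypothetical sample data, invented for this illustration.
sales = pd.DataFrame(
    {
        "region": ["east", "west", "east", "west", "east"],
        "units": [10, 4, 7, 9, 3],
    }
)

# A typical groupby aggregation written against the plain Pandas API.
totals = sales.groupby("region")["units"].sum().sort_index()

print(totals)
```

On a machine with RAPIDS cuDF installed and a supported NVIDIA GPU, the same file can be launched with `python -m cudf.pandas script.py` (or with `%load_ext cudf.pandas` in a Jupyter notebook) so that supported operations run on the GPU and the rest fall back to the CPU. Without cuDF, the script simply runs as normal Pandas code.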

NVIDIA's DGX Spark, previously introduced as Project DIGITS at CES 2025 in January, is a desktop computer designed to deliver computational power beyond that found in gaming systems. DGX Spark is tailored for AI developers, researchers and data scientists who need to prototype, fine-tune and deploy large AI models locally without relying solely on cloud or data center resources. Specifications:

ASUS will offer a model called the ASUS Ascent GX10 with 1TB of storage for $2,999. If you want more storage, you can buy directly from NVIDIA and get the DGX Spark with 4TB of storage for $3,999. Finally, if you wish to build a mini AI factory, you can buy two DGX Sparks from NVIDIA with a connecting cable for $8,049.

Source: https://www.nvidia.com/en-us/products/workstations/dgx-spark/

DGX Station is also a desktop computer for AI professionals. It will be much more powerful than DGX Spark and available only through partners. NVIDIA will not make its own version of this desktop computer. Pricing information is not available. However, its initial specifications indicate that it will be costly. Here are the specifications that NVIDIA has released to date:

The Blackwell GPU is in full production, and orders from the top four cloud service providers (Oracle, Google, Microsoft and Amazon) have already passed the peak year of sales for the Hopper GPUs. In 2024, NVIDIA sold 1.3 million Hopper GPUs, and so far in 2025, there are 3.6 million orders for Blackwell. Additionally, GB200 NVL72 running Dynamo will be 40 times better than Hopper for inference.

The next generation of data center GPUs will be based on the Rubin architecture, named after Vera Rubin, an American astronomer whose work provided key evidence for dark matter. It promises to be 2.5 times faster than its predecessor. It will come with 288GB of HBM4 memory.

The Blackwell GB200 NVL72 is currently available. It connects 36 Grace CPUs and 72 Blackwell GPUs in a single rack. This allows these 72 GPUs to act as a single giant GPU — what NVIDIA calls the ultimate scale-up. Such a system is capable of hosting trillion-parameter large language models (LLMs).

The Blackwell Ultra NVL72 (GB300 NVL72) will be available in the second half of 2025. It promises 1.5 times the performance of the GB200 NVL72. There are also improvements to the instruction set for LLMs (attention instructions), and there is 1.5 times more memory.

The Vera Rubin rack configuration will be available in the second half of 2026. It will connect 88 Vera CPUs using NVLink-C2C and 144 Rubin GPUs using NVLink6. Pretty much everything is new in this design except for the chassis, which will make upgrades easier.

The Vera Rubin Ultra should be available in the second half of 2027. The CPUs will be the same, but this rack will contain 576 Rubin Ultra GPUs connected using NVLink7. It promises to be 14 times faster than the GB300 NVL72.

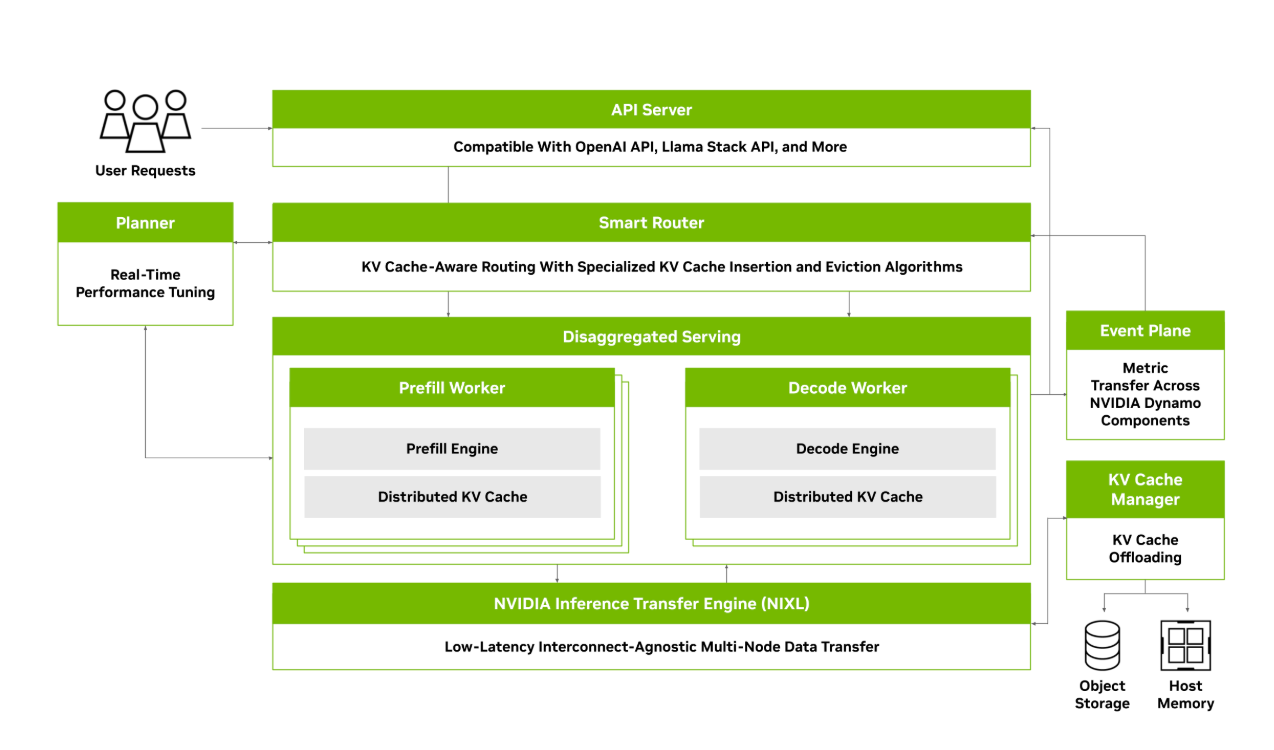

The best way to host a trillion-parameter LLM is to distribute it across multiple GPUs linked together using NVL72. NVIDIA refers to this technique as disaggregated serving. However, if you split an LLM across GPUs, you do not want to do it randomly. Instead, you want to split the LLM in a way that minimizes communication between GPUs. You also want to be able to scale each portion of the LLM separately, the same way you can scale different services within an application separately using a microservices architecture.
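To see why the way you split a model matters, consider a toy sketch. It assumes a model that is a simple chain of layers, where each layer passes its output to the next one, and it counts how many of those handoffs cross a GPU boundary. The function names are hypothetical and this is not how NVIDIA's software decides placement; it only illustrates that a careless split creates far more cross-GPU traffic than a contiguous one.

```python
from typing import List


def contiguous_split(num_layers: int, num_gpus: int) -> List[int]:
    """Place neighboring layers on the same GPU, one contiguous block per GPU."""
    return [layer * num_gpus // num_layers for layer in range(num_layers)]


def round_robin_split(num_layers: int, num_gpus: int) -> List[int]:
    """A careless split: deal layers out to GPUs like cards."""
    return [layer % num_gpus for layer in range(num_layers)]


def cross_gpu_hops(assignment: List[int]) -> int:
    """Count layer-to-layer handoffs that have to leave one GPU for another."""
    return sum(1 for a, b in zip(assignment, assignment[1:]) if a != b)


if __name__ == "__main__":
    layers, gpus = 8, 4
    for name, split in (("contiguous", contiguous_split),
                        ("round robin", round_robin_split)):
        assignment = split(layers, gpus)
        print(name, assignment, "hops:", cross_gpu_hops(assignment))
```

With 8 layers on 4 GPUs, the contiguous split needs 3 cross-GPU handoffs per pass while the round-robin split needs 7, even though both put two layers on every GPU.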

To address the challenges of distributed and disaggregated inference, NVIDIA created Dynamo, an open source inference framework. It provides the following capabilities:

Spectrum-X represents NVIDIA’s scale-out story. A lot of people were surprised to see NVIDIA get into the Ethernet world, but the company wanted to help Ethernet become more like InfiniBand. (InfiniBand is usually used for high-performance computing, whereas Ethernet is best for all-purpose networking, such as connecting devices.) So, it invested in Spectrum-X, which is composed of a Spectrum-X SuperNIC and a Spectrum-X Ethernet switch, and gave it capabilities that InfiniBand offers.

All this high-speed compute and networking requires high-speed storage to power it. With this purpose in mind, MinIO partnered with NVIDIA to integrate NVIDIA GPUDirect Storage, NVIDIA BlueField-3, NVIDIA NIM and NVIDIA’s GPU Operator with AIStor. These new features and integrations are open to MinIO AIStor beta customers under private preview.

AIStor’s PromptObject transforms how users interact with stored objects by allowing them to ask questions about their data's content and extract information using natural language — eliminating the need to write complex queries or code. The NVIDIA GPU Operator is built on the Kubernetes Operator Framework and provides a comprehensive automation solution for GPU management. The integration of AIStor with the NVIDIA GPU Operator will allow organizations to easily set up a GPU-based cluster for use with AIStor’s PromptObject API.

MinIO is adding NVIDIA NIM microservices so that AIStor customers that wish to deploy PromptObject using NIM will be able to do so directly from AIStor’s Global Console.

The forthcoming integration of NVIDIA GPUDirect Storage (GDS) with MinIO AIStor is a co-engineered solution that will allow objects from AIStor to be sent directly to GPU memory without using the CPU’s memory as a bounce buffer, which is the technique that must be employed today.

The NVIDIA BlueField-3 (BF3) DPU has 16 ARM cores, 400Gb/s Ethernet or InfiniBand networking, and hardware accelerators for tasks like encryption, compression and erasure coding. AIStor’s small binary size (~100MB) makes AIStor an ideal candidate for native deployment on BF3 DPUs, where resource constraints demand lightweight yet powerful software.

AIStor, deployed on the BF3 DPU, will provide enterprises with a platform that integrates seamlessly with NVIDIA’s Spectrum-X networking architecture. This will deliver the low-latency, high-bandwidth performance required for AI environments and will ensure data transfers that can feed hungry GPUs. Deploying AIStor on BF3 DPUs will also allow customers to easily leverage GPUDirect Storage (GDS) capabilities.

A key part of GM’s partnership with NVIDIA on its self-driving fleet will be NVIDIA Halos, a full-stack comprehensive safety system that unifies vehicle architecture, AI models, chips, software, tools and services to ensure the safe development of autonomous vehicles (AVs) from cloud to car.

The system ensures safety across the full development life cycle with guardrails at design, deployment and validation times that collectively build safety and explainability into AI-based AV stacks. These guardrails are implemented using three computers: NVIDIA DGX for training, NVIDIA Omniverse and Cosmos for simulation, and NVIDIA AGX for deployment.

NVIDIA announced its Photonics Switch System for data centers the size of football fields. When a data center is this large, signals cannot be efficiently transmitted with copper. This collaboration with many ecosystem organizations will result in the Quantum-X Photonics Switch being available in the second half of 2025, and the Spectrum-X Photonics Switch in the second half of 2026.

NVIDIA sees physical AI and robotics as the next frontier of AI. Consequently, it partnered with Disney Research and Google DeepMind on physical AI. The partnership is called Project Newton and will pursue an advanced physics engine. Project Newton also pioneered the GR00T N1 foundation model, which features a dual-system architecture inspired by principles of human cognition. The first system, “System 1,” is a fast-thinking action model, mirroring human reflexes or intuition. “System 2” is a slow-thinking model for deliberate, methodical decision-making. GR00T N1 will be open sourced.
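The fast/slow split is easier to picture with a toy control loop. The sketch below is purely illustrative and is not GR00T N1 code: `slow_planner`, `fast_policy`, `run` and the one-dimensional "robot" are all hypothetical stand-ins. It only shows the general pattern of a deliberate planner that runs occasionally while a cheap reactive policy acts on every tick.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    target: float  # where the planner wants the robot to end up


def slow_planner(position: float, goal: float) -> Plan:
    """'System 2' stand-in: deliberate, so it is only called occasionally."""
    return Plan(target=goal)


def fast_policy(position: float, plan: Plan, max_step: float = 1.0) -> float:
    """'System 1' stand-in: a cheap reflex that nudges toward the current target."""
    error = plan.target - position
    return max(-max_step, min(max_step, error))


def run(goal: float, ticks: int, replan_every: int):
    """Act on every tick, but refresh the plan only every `replan_every` ticks."""
    position, replans = 0.0, 0
    plan = slow_planner(position, goal)
    replans += 1
    for tick in range(ticks):
        if tick > 0 and tick % replan_every == 0:
            plan = slow_planner(position, goal)
            replans += 1
        position += fast_policy(position, plan)
    return position, replans


if __name__ == "__main__":
    print(run(goal=5.0, ticks=10, replan_every=5))
```

In this run the fast policy acts 10 times while the planner is consulted only twice, which is the division of labor the dual-system description suggests.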

An NVIDIA partnership with Cisco, T-Mobile and ODC (a Cerberus Capital Management portfolio company) will develop a full-stack AI-based wireless network including hardware, software and architecture for 6G, explicitly focusing on enabling edge AI applications.

Cisco will integrate Spectrum-X into its products.

NVIDIA announced new technologies and partnerships at its March event that span compute, networking and storage. A quick numerical recap is as follows:

Compute: Six new offerings for providing raw compute for desktop systems, analytics engines and GPU architectures.

Networking: Six announcements related to networking GPUs together. These launches include networking of GPUs within a rack and the networking technologies needed by hyperscalers (or organizations wishing to imitate hyperscalers) where the physical size of the data center introduces challenges.

Storage: MinIO and NVIDIA have been working closely to make sure storage can keep up with networking and compute. MinIO recently announced future support for four NVIDIA technologies: GPUDirect Storage, NIM, BlueField-3 and the GPU Operator.

Other Partnerships: Five products that are a result of additional partnerships with GM, Cisco, Disney Research, T-Mobile, Google DeepMind, ODC, and an ecosystem of partners working on photonics switches.